Tulpas: invisible friends in the brain.

By Jonas Mago

Published on June 1, 2023

| Last updated on

April 23, 2024

Tulpas: invisible friends in the brain

⚠️ TLDR; check out my to-do list.

⚠️ Practical tipps and tricks I learned during this brainhack school.

ℹ️ I received great feedback during my final presentation of this project! I haven’t been able to incorporate all of the feedback yet, however, check out a full list of feedback here.

ℹ️ In the spirit of open science, I have successfully contributed to the nilearn project by correcting a typo. See my merged pull request.

Background

Tulpamancy is an intriguing psychological and sociocultural phenomenon that presents novel opportunities for neuroimaging research. Derived from Tibetan Buddhist practices, the term "Tulpa" refers to a self-induced, autonomous mental companion created through focused thought and mental conditioning. Individuals who engage in this practice, known as Tulpamancers, report a range of experiences that suggest Tulpas operate independently of the host's conscious control, often possessing their distinct personality, preferences, and perceptual experiences.Tulpas are not viewed as hallucinations but rather as fully-fledged conscious entities sharing the same neurological space as the host. The creation and interaction with Tulpas require intense concentration and visualization, often leading to the creation of a mentally constructed realm, called a “wonderland,” where Tulpamancers and their Tulpas can interact.

Tulpamancy provides a unique framework to explore the capacities of the human mind in creating and perceiving conscious entities. This has substantial implications for our understanding of consciousness, agency, and the malleability of perception. Moreover, it brings forward questions about the neurological underpinnings of these phenomena. How does the brain of a Tulpamancer accommodate multiple conscious entities? Can we observe a distinct neurophysiological pattern associated with the presence of a Tulpa

Through neuroimaging, we aim to investigate these questions, seeking to shed light on the intriguing practice of Tulpamancy and its implications for our understanding of the human mind and consciousness.

To get a better understanding of Tulpamancy you can check the following video. The video summarises the most important tipps for creating tulpas. These tipps are sourced from the reddit thread r/tulpas.

Data

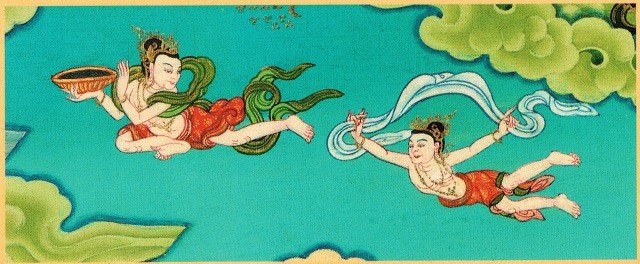

The data used for this project was collected by Dr. Michael Lifshitz (McGill) from 2020 to 2022 at Stanford University, California, USA. The dataset comprises fMRI scans of 22 expert Tulpamancers. The data was aquired during a sentence completion task originally designed by Walsh et al. (2015). The tasks consists of 10 runs. During each run, participants are given a beginning of a sentnece such as “in summer…”. Participants then have 9 seconds to complete this sentence in their mind (preparation) and another 12 seconds to write down the complete sentence on a sheet of paper in front of them (write). These 10 runs are repeated multiple times for different conditions. The conditions comprise self: completing or writing the sentence as yourself; Tulpa: letting the tulpa complete or write the sentence; and a control condition friend: imagine a friend completing or writing the sentence.

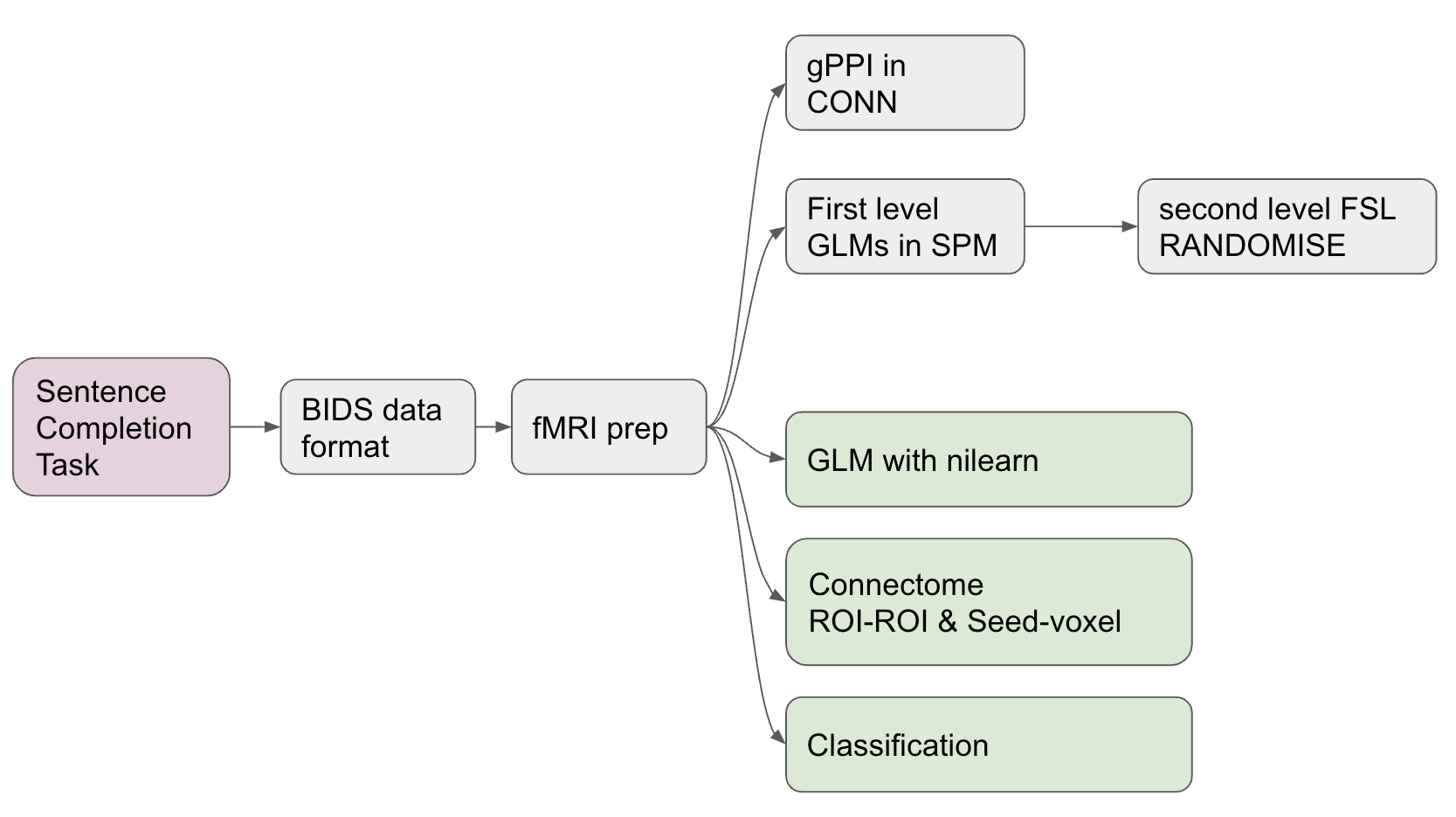

The image below depicts an overview of the analysis. The grey boxes have already been completed prior to the brainhack school (BIDS organisation, preprocessing with fmriprep, gPPI in CONN, and GLM in SPM and FSL). The green boxes are the main focus of the brainhack school coding sprint (GLM with Nilearn, Connecttome analysis, Classification).

protocol of the sentence completion task

The data was preprocessed with fmriprep before any of the analyses were carried out.

Deliverables

In addition to the deliverables below, you can find a more extensive report of this project on this website.

The date of publishing the final results on this [website](https://jonasmago.github.io/brainhack2023/intro.html) is depending on final confirmatino of the principal investigator of this study.

You can also find all the code used for the results below in this github repo.

Progress overview

The brainhack school provided four weeks of space during which I could work full time on the analysis of this Tulpa dataset. At the end of these four weeks I was able to complete a first draft of all major analyses intended. These analyses include:

- GLM (first and second level).

- Task related connectivity.

- ML classifier to differentiate conditions using the connectome;

- A deep learning decoding appraoch using PyTorch to distinguish the task conditions.

Tools I learned during this project

This project was intended to upskill in the use of the following

Nilearnto analyse fMRI data in python.Scikit-learnto realise ML classification tasks on fMRI related measures.Py Torchto implement a brain decoder.Jupyter {book}andGithub pagesto present academic work online.Markdown,testing,continuous integrationandGithubas good open science coding practices.

Results

Deliverable 1: project report

You are currently reading the project report.

Deliverable 2: project website

In addition to this report, I made a github website. However, please note that the website does not currently show the full report of this project as the publication is waiting for final approval from the Principal Investigator of this project.

This project website was created using Jupyter {books}. This format allows for a potential submission to neurolibre. Neurolibre is a preprint server for interactive data analyses.

Deliverable 3: project github repository

The repository of this project can be found here. The objective was to create a processing pipeline for ECG and pupillometry data. The motivation behind this task is that Marcel’s lab (MIST Lab @ Polytechnique Montreal) was conducting a Human-Robot-Interaction user study. The repo features:

- a video introduction to the project.

- a presentation made in a jupyter notebook on the results of the project.

- Notebooks for all analyses.

- Detailed requirements files, making it easy for others to replicate the environment of the notebook.

- An overview of the results in the markdown document.

Deliverable 4: GLM results

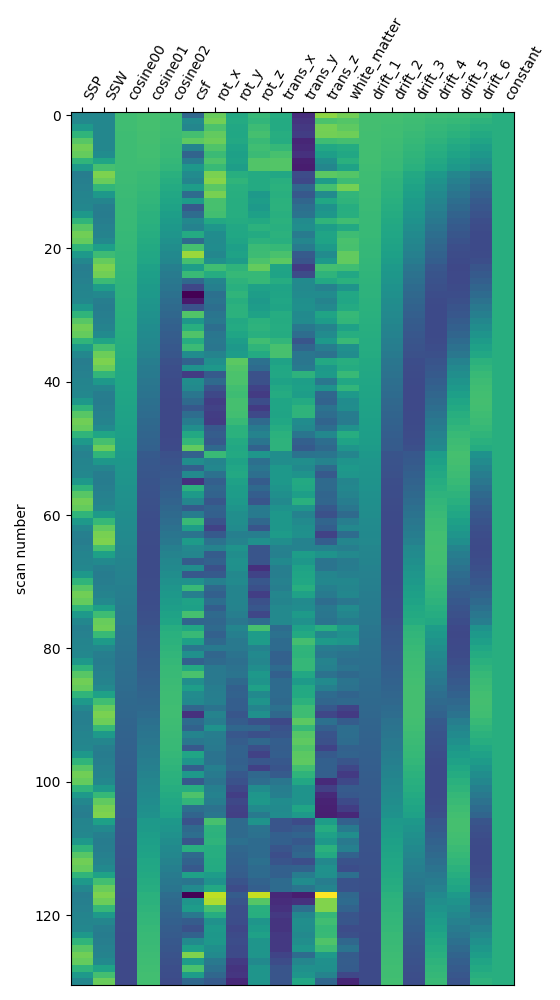

I have used Nilearn to compute a GLM that compares different conditions of the task. Specifically, I am interested in teh respective contrasts between self, tulpa, and friend. I am interested in these contrasts for the preparation and writing phase respectively. I have computed the GLM on fmriprep prepreocessed data. I have then created a design matrix that integrates the timing files (first two columes) as well as well as some movement regressors (see figure below). To incorporate the movement regressors I have used the load_confounds_strategy from nilearn with the following parameters: denoise_strategy="scrubbing", motion="basic", wm_csf="basic".

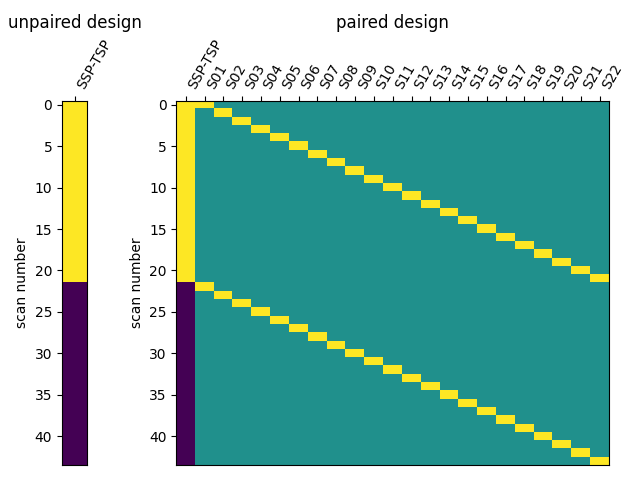

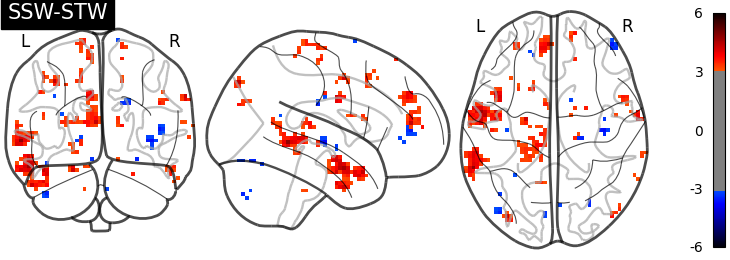

I have then computed a second level analysis that combines the individual beta maps into a group level contrast between the different conditions (see design matrix below). As a result I receive z-scores of each voxel, indicating how much that voxel differs across the two compared condition. The plot below displays these z-scores for comparing the self-write with the tulpa-write condition. A z-score of 3.0 is equivalent to a 99% confidence interval, a z-score of 2.3 is equivalent to a 95% confidence interval.

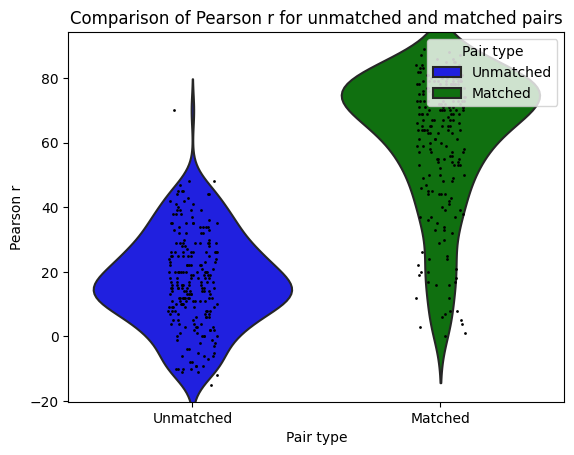

I then used Neuro Maps to compute the pearson-r correlation between the first level outputs computed with Nilearn to those I previously computed with SPM. To test for the significance of these comparisons, I re-computed the pearson-r for random pairs. The r values of the true (matched) pairs have a mean of 61.32 while the mean of the random (unmatched) pairs is 17.09. A two-sample t-test revleaed that this difference is significant: T-stat: -26.24 and a p-value=0.000.

ℹ️ matched pairs are between software, within subject, within contrast, within run.

ℹ️ unmatched pairs are between software, between subject, between contrast, between run

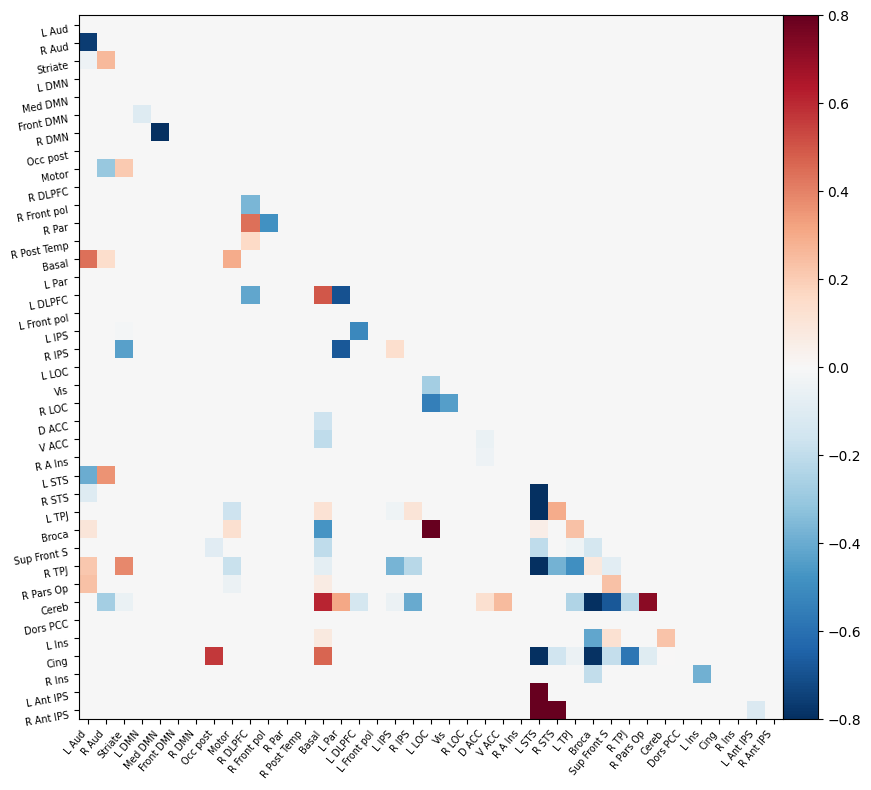

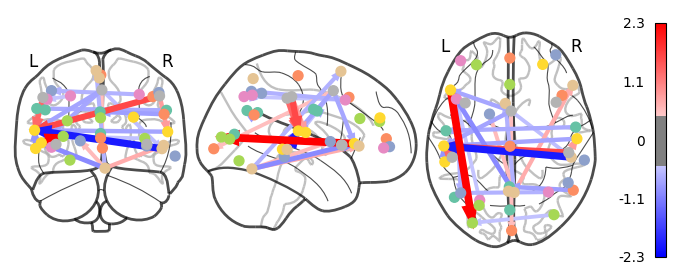

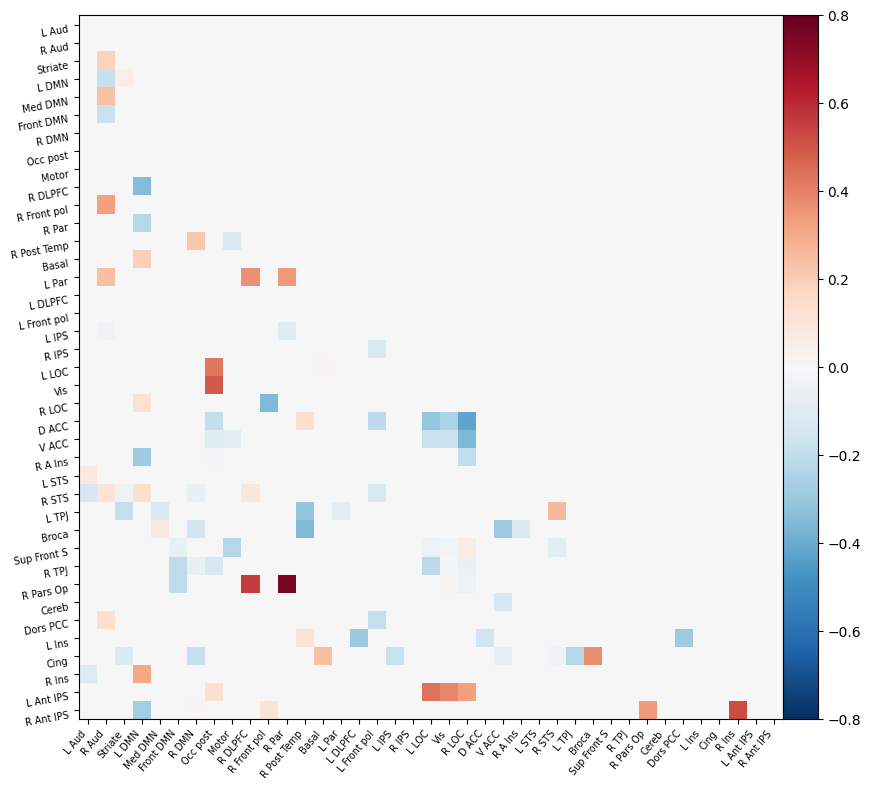

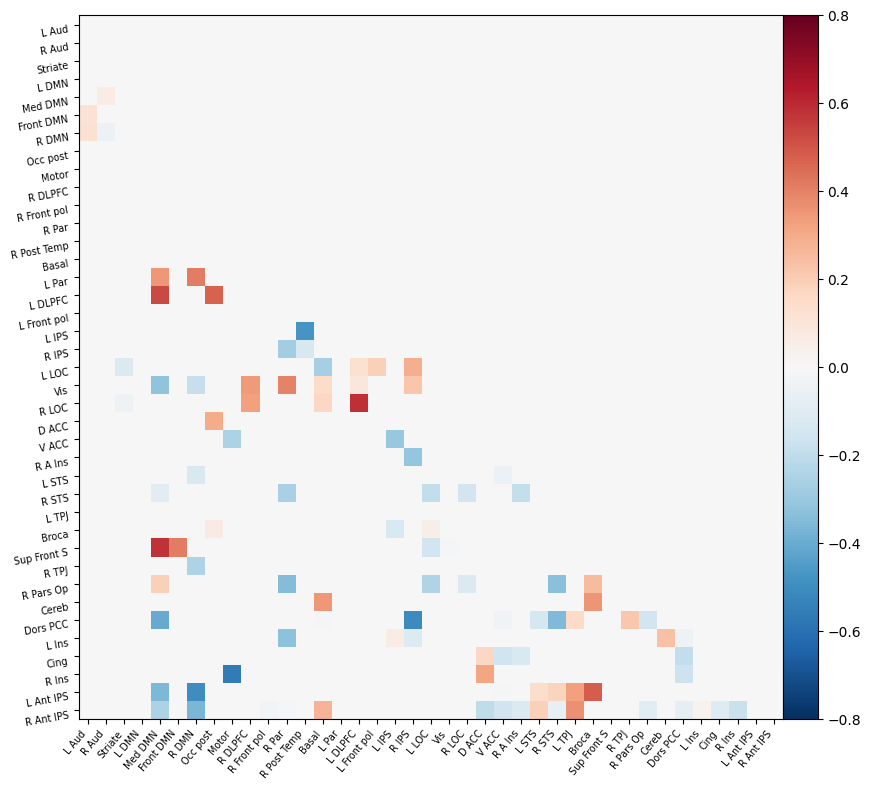

Deliverable 5: Connectome results

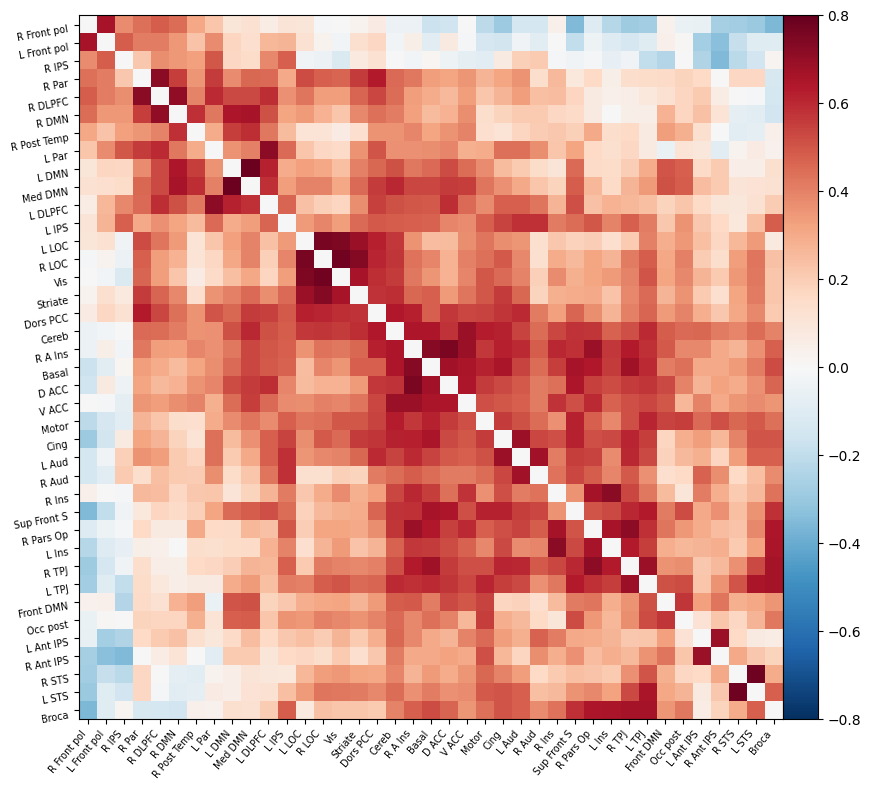

I have parcellated the brain into 39 regions based on the probabilistic msdl atlas. A probabilistic atlas assigns a probability to each voxel, indicating how likely it is that that voxel belongs to a specific region. I have then computed the correlation between all of these 39 regions, resulting into a connectome of shape 39*39. Given that that the correlation measure is none directive, the upper and lower triangle of the connectome mirror each other. Below is an example of a connectome for one subject and one condition.

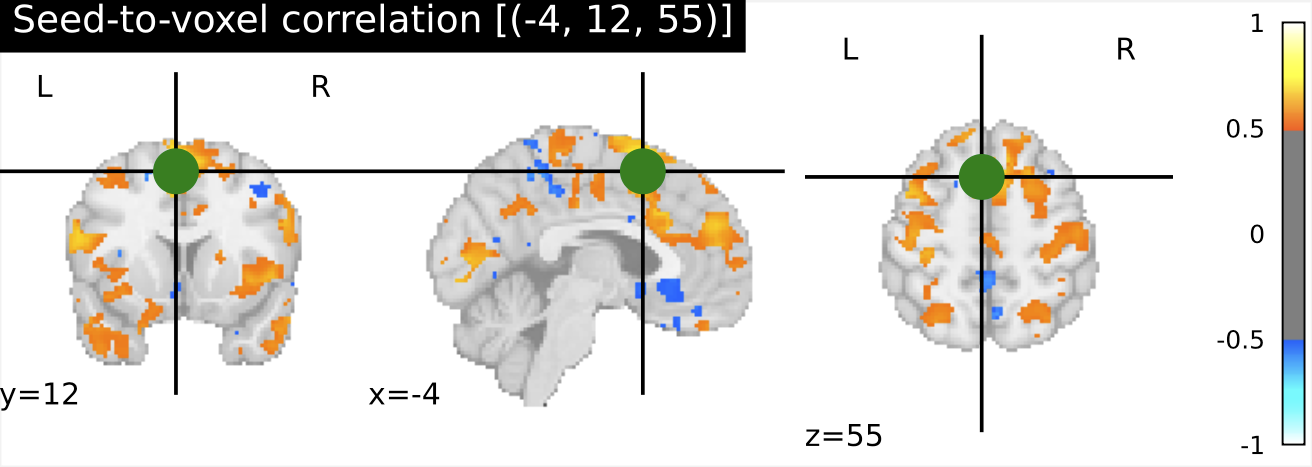

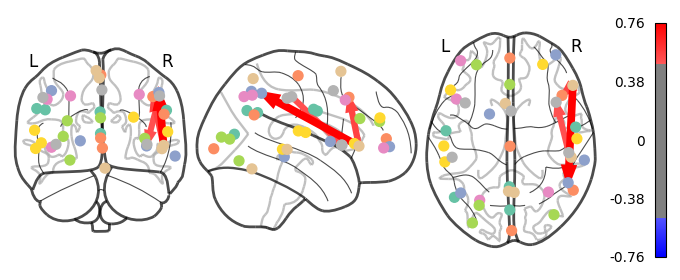

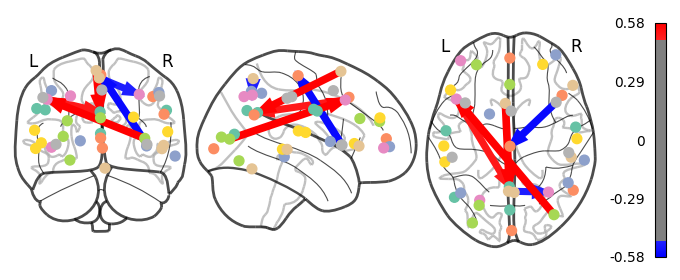

Deliverable 6: Seed-to-Voxel connectivity results

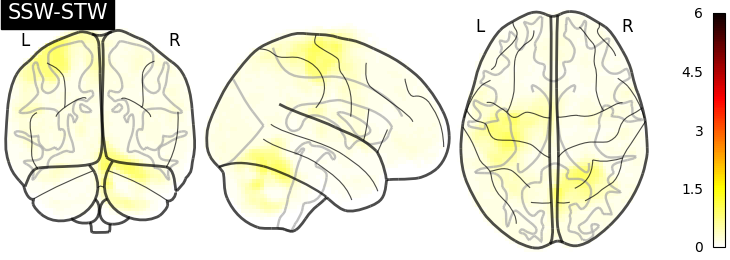

I have previously computed a gPPI for this dataset using CONN. I wanted to replicate this task dependent seed to voxel correlation using Nilearn. I have specified the seed based on the group peak activation in the SMA, using the results from the SPM and Randomise analysis. Seed coordination in MNI space are (-4, 12, 55). I have then constructed a sphere around this voxel with a radius of 5 mm. Using this ROI, correlations to all voxels based by task condition were computed. Below is an illustrative example of the connectivity values for one subject and one condition.

Deliverable 7: ML-classifier results

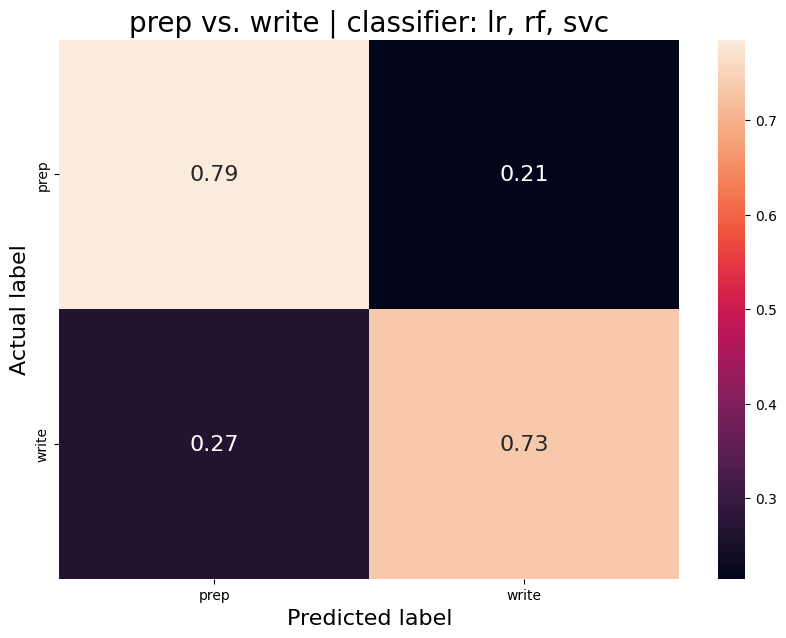

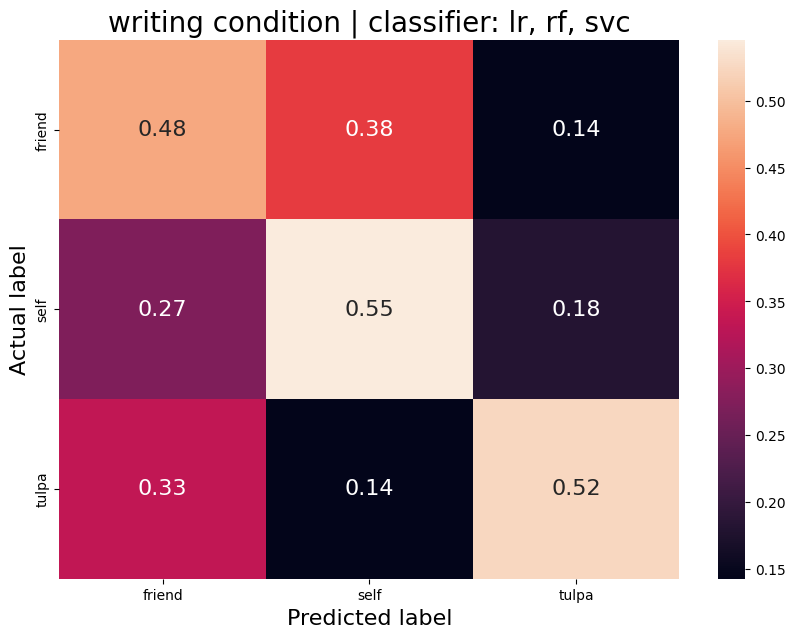

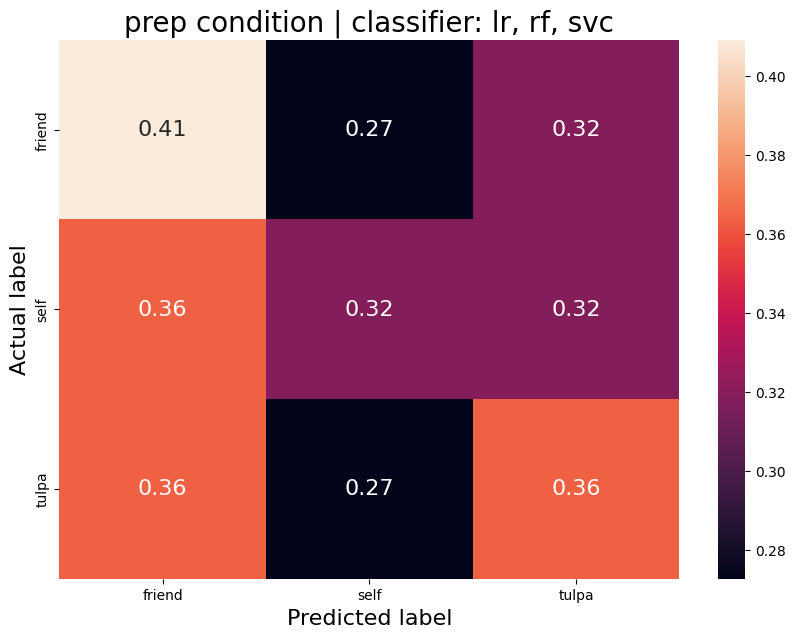

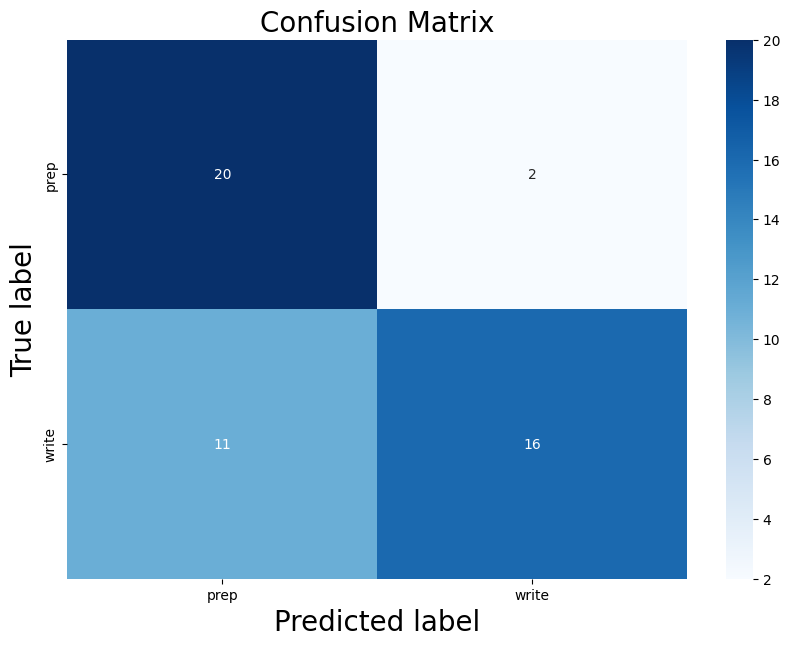

The aim was to train a classifier that can accurately distinguish between different task conditions. I used a majority vote ensemble classifier that combines LogisticRegression, RandomForestClassifier, and a SVC. When classifying the conditions prep vs. write, the classifier achievs an acciracy of ~80%, so well above chance. However, this is not surprising as the writing conditions will have muhc stronger motor cortex activation and the two conditions are quite different. I then classified the self, tulpa, and friend conditions for preparation and writing respectively. In both conditions, we have an accuracy of ~51% for this 3-group classification problem. Given the three groups, chance levels are at 33.3%, thus an accuracy of 51% is well above chance, YAY!

ℹ️ all classifiers were trained and tested on the connectome. Next, the plan is to train classifiers on the beta maps of the GLM. See my to-do list for further details.

Prep-write condition

Average accuracy = 0.76

P-value (on 100 permutations): p=0.00

Write condition

Average accuracy = 0.52

P-value (on 100 permutations): p=0.02

Prep condition

Average accuracy = 0.37

P-value (on 100 permutations): p=0.32

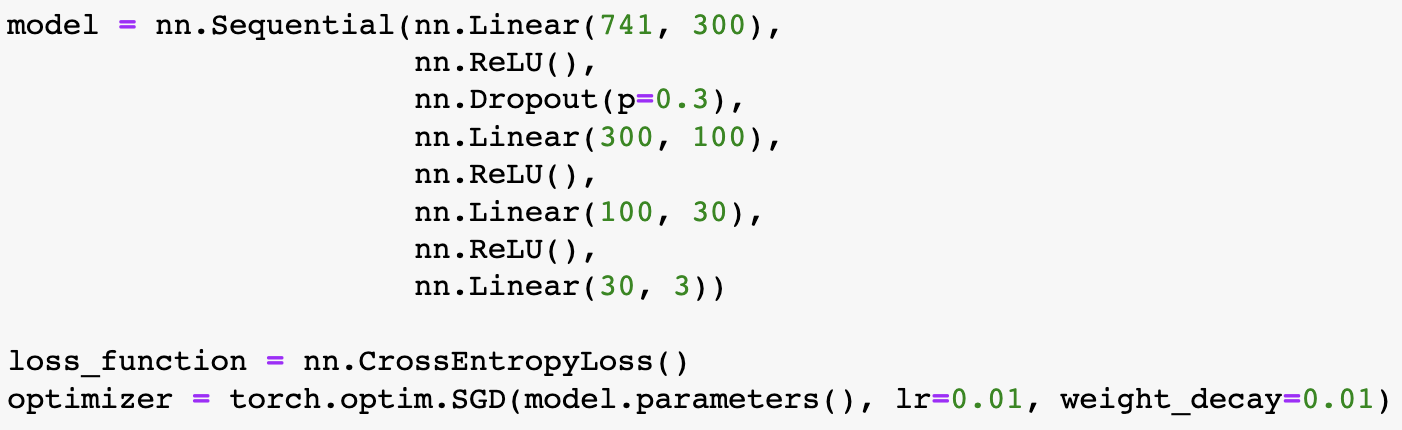

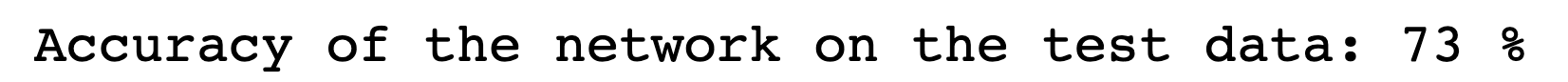

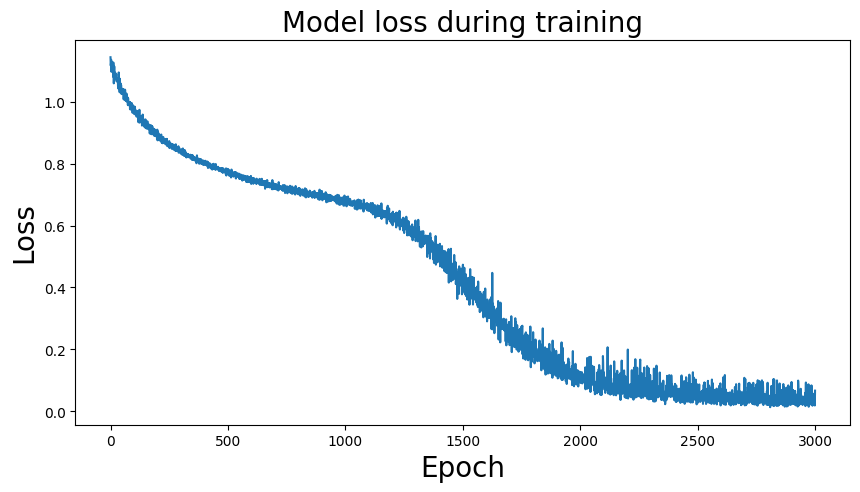

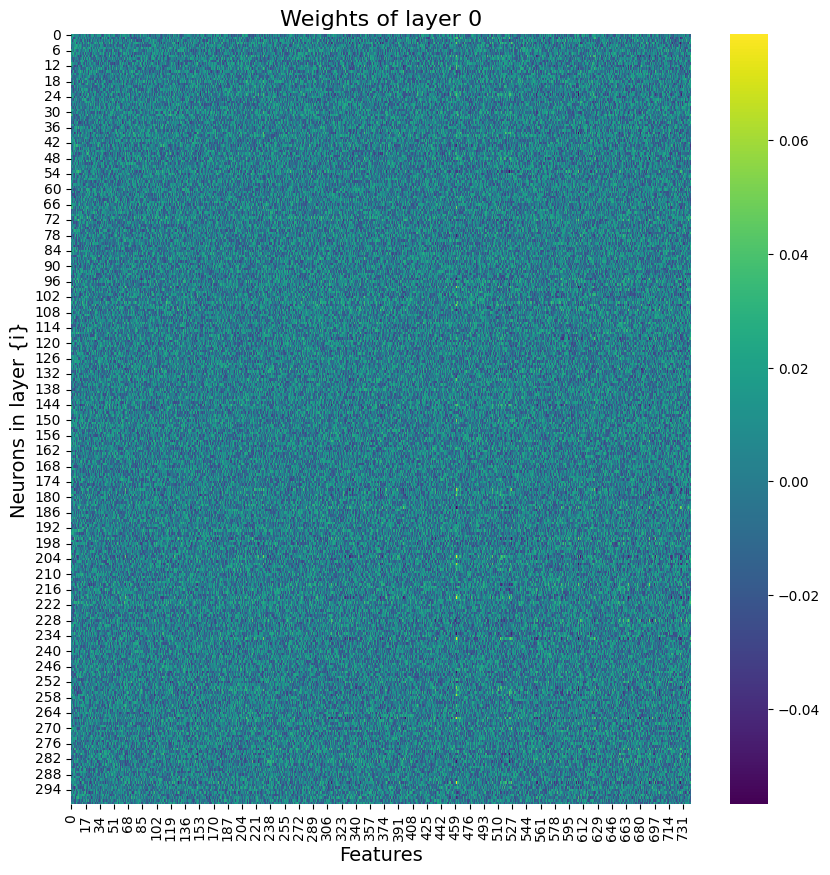

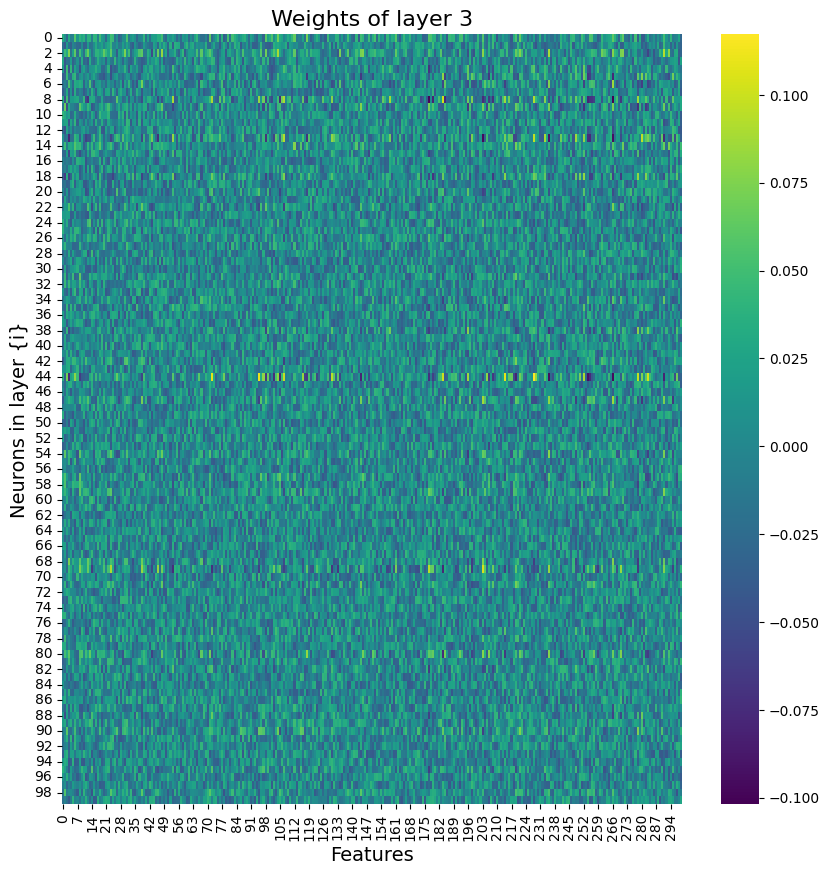

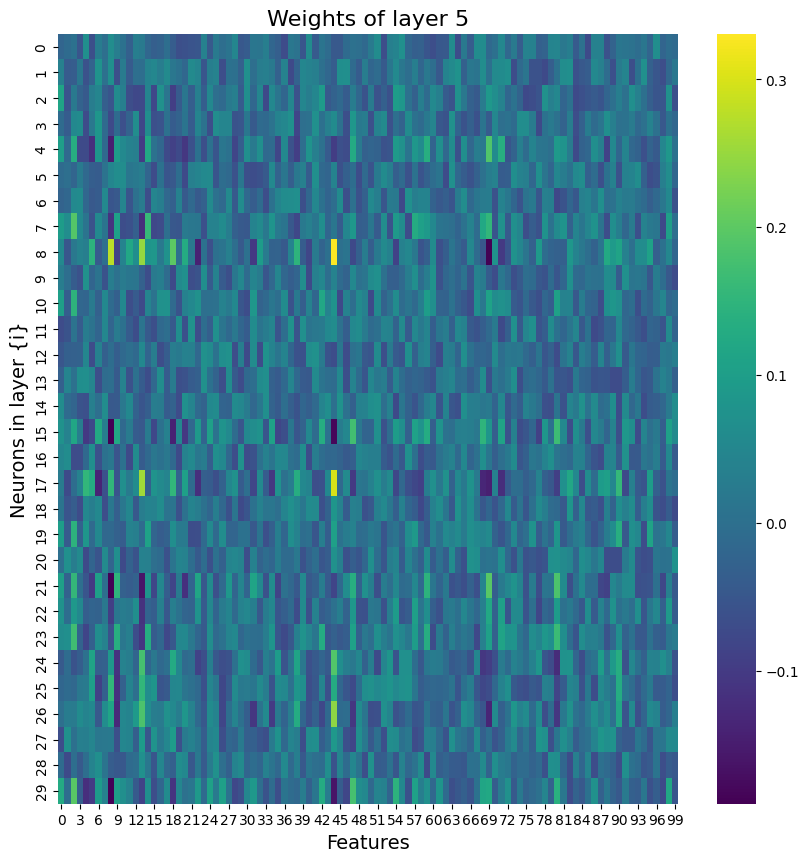

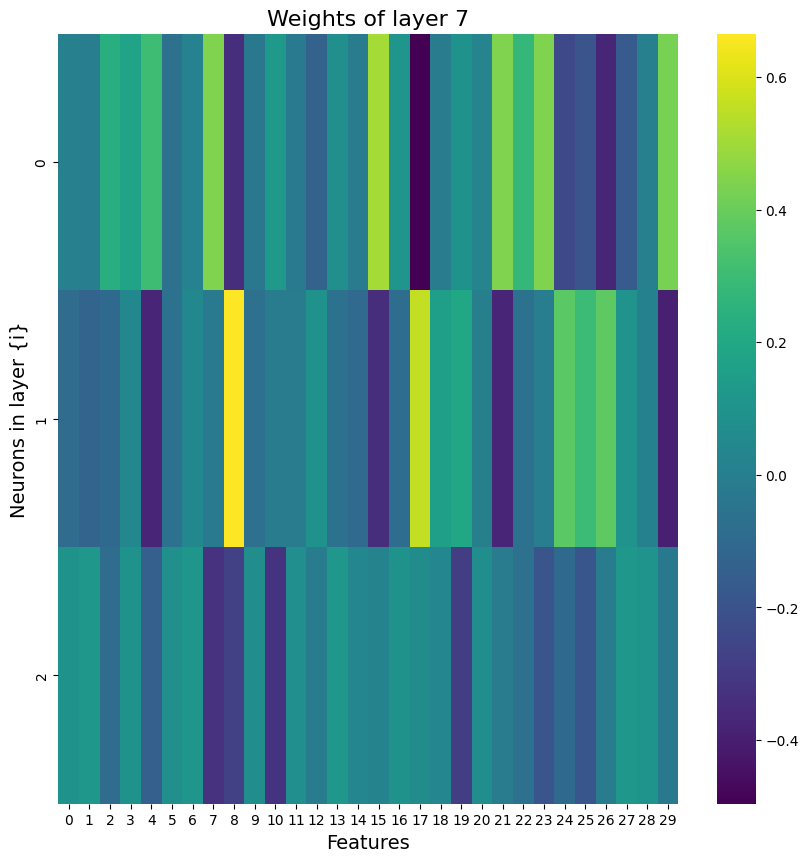

Deliverable 8: Deep neural network encoding results

Building on what I have done with the ML classifiers, I wanted to explore if I could achieve the same resutls with a Neural Netowrk. I used PyTorch to build a Multilayer Perceptron with 4 linear layers, 3 rectified linear unit, and one dropout layer with a threshold of 0.3. I then trained this model with a learning rate of 0.01 and a weight decay of 0.01. The results show an accuracy of 0.73 which is simialr, though slightly lower, than the accuracy of the scikit learn ensemble classifier of 0.76.

Below you can see the definitino of the model, the confusion matrix, the learning rate of the model, as well as the weights of the trained model.

results of the neural network

My to-do list

- preprocess the fMRI data with fmriprep

- run GLMs using SPM for the first level and FSL Randomise for the second level

- replicate GLM in Nilearn

- replicate a GLM in Nilearn

- compute the correlation between first-level beta maps in the SPM and Nilearn analysis

- replicate the Nilearn GLM with the exact same parameters as in SPM

- fd_threshold = 1 (default is 0.5mm)

- change from

load_confound_strategytoload_confoundas it’s more custamizable and allows to match parameters of SPM. - check if I used a high pass filter in SPM, if not deactivate it in Nilearn.

- check if I standardized in SPM, if not deactivte it in Nilearn.

- Cluster correct the Nilearn second-level contrasts using

cluster_level-inference. - re-run the GLMs including a brain mask that excludes the cerebellum and brain stem. As we are not interested in the analysis of these regions their exclusion is legit. I can use a mask from brainvolt that I can download here.

- connectivity analysis

- compute connectome for all conditions and save as

.npy - compute

graph theorymetrics to characterise and compare the connectomes. François Paugam recommended to look into the Networks of the Brain as an introduction.

- compute connectome for all conditions and save as

- compute seed-to-voxel correlations

- ML classifier

- ML classifier on connectomes

- ML classifier on seed-to-voxel maps

- ML classifier on the average activity for each of the 10 trials (not entire runs) cropped to an ROI in the SMA

- Deep Neural Network Decoder using PyTorch

Feedback from the final presentation

- visualise things in MRIcroGL, soon implemented in nilearn as `niview` - change my pearson-r values to [-1, 1] (currently [-100,100]) - for comparing nilearn and spm beta maps: specify not-matched. E.g.: - between software, within subject, within contrast, within run - between software, between subject, between contrast, between run - feature selection must be either on (A) train set or (B) integrated in cross-validation. Make sure it's not selecting featuers on the entier dataset --> danger of overfitting! - r2 is a measure for the percentage of variance explained. Not relevant here, thus, remove it. - explain permutation test for significance testing. - apply the classifer on beta maps per trials (there are 10 trials per run). I can use ROIs or entire beta masks. The model will take care of the many variables. - try a searchlight approach: train a classifer on different ROIs or voxels and then compare the accuracy. This could lead to an accuracy map with voxel resolution, however, this is computationally expensive. - it's important to **de-mean** my data before doing this (e.g. use z-score). - look into **stratified cross validation** to deal with multiple runs per subject in the classifier. If I use stratification this shouldn't be a problem.Tipps and Tricks

useful hacks, and notes for next iterations of this project

- Plotting results

- plotly

- histograms are powerful because they don’t collapse either time or space

- raindrop plots are powerful as they show all individual points

- examples in the python graph gallery

- Python virtual environment

- To make and actiavte a python virtual environment, use the following two lines:

python3 -m venv venvsource venv/bin/activate

- To generate a

requirements.txtfile- Option 1:

pip freeze. - Option 2: Pipreqs automatically detects what libraries are actually used in a codebase.

- Option 1:

- To make and actiavte a python virtual environment, use the following two lines:

- Python local pip install

- make sure to have an init.py file in the src directory

- also make sure to have a setup.py file

- Navigate to root directory and run:

pip install -e .

- Python ipykernal allows to install kernals from python and conda environments

python -m ipykernel install --user --name=<my_env>

- VIM: create and edit python files in the Terminal

vim <file name>.py%ssearch and replaceiinsertion mode- doesn’t leave a mess in the terminal after closing

- alternative to

vimisnano

- Cookiecutter as template for repos.

- Debugging scripst

breakpoint()can replace `import pdb; pdb.set_trace()``python -m pdb <script name>runs a script with pdb. presscfor continue when the script is loaded. This will allow you to be in the pdb when the code crashes.

- Neuro Maps allows to compare the pearsonr and p-value between two nifti files

- The Good Research Blog shows how to do a local pip install (and much more)

- writing good code

- github copilot

- sourcery automatically suggests improvements

- can I describe the function in one line

About the Author

Name: Jonas MagoContact: jonas.h.mago@gmail.comAffiliation: McGill UniversityBackground: My background is in neuroimaging (EEG, fMRI), cognitive sciecnes, Active Inference, and Philosophy. After a BA in Liberal Arts and Sciences at the University College at Maastricht University and an MSc in Mind, Language, and Embodied Cognition at the University of Edinburgh, I am now pursuing my PhD at McGill University.General Interests: I am using the Active Infernece framework to study the predictive and bayesian mechanics undrelying contemplative practices such as prayer, meditation, and psychedlics. Specifically, I work with (A) expert meditators from the Theravaden tradition that are able to enter absorption states called Jhana at will, (B) Charismatic Christian Prayer practitioners, and (C) experiences of entity encounters under the influnece of DMT.